Program

Conference Track

Causal Inference and Machine Learning: Estimating and Evaluating Policies

Susan Athey - Stanford Graduate School of Business

In many contexts, a decision-maker can choose to assign one of a number of "treatments" to individuals. The treatments may be drugs, offers, advertisements, algorithms, or government programs. One setting for evaluating such treatments involves randomized controlled trials, for example A/B testing platforms or clinical trials. In such settings, we show how to optimize supervised machine learning methods for the problem of estimating heterogeneous treatment effects, while preserving a key desideratum of randomized trials, which is providing valid confidence intervals for estimates. We also discuss approaches for estimating optimal policies and online learning. In environments with observational (non-experimental) data, different methods are required to separate correlation from causality. We show how supervised machine learning methods can be adapted to this problem.

Susan Athey is The Economics of Technology Professor at Stanford Graduate School of Business. She received her bachelor's degree from Duke University and her Ph.D. from Stanford, and she holds an honorary doctorate from Duke University. She previously taught in the economics departments at MIT, Stanford and Harvard. In 2007, Professor Athey received the John Bates Clark Medal, awarded by the American Economic Association to "that American economist under the age of forty who is adjudged to have made the most significant contribution to economic thought and knowledge." She was elected to the National Academy of Sciences in 2012 and to the American Academy of Arts and Sciences in 2008. Professor Athey’s research focuses on the economics of the internet, online advertising, the news media, marketplace design, virtual currencies and the intersection of computer science, machine learning and economics. She advises governments and businesses on marketplace design and platform economics, notably serving since 2007 as a long-term consultant to Microsoft Corporation in a variety of roles, including consulting chief economist.

I will describe the "Automatic Statistician" (http://www.automaticstatistician.com/), a project which aims to automate the exploratory analysis and modelling of data. Our approach starts by defining a large space of related probabilistic models via a grammar over models, and then uses Bayesian marginal likelihood computations to search over this space for one or a few good models of the data. The aim is to find models which have good predictive performance and are somewhat interpretable. The Automatic Statistician generates a natural language summary of the analysis, producing a 10-15 page report with plots and tables describing the analysis. I will also link this to recent work we have been doing in the area of Probabilistic Programming (including a new system in Julia) to automate inference, and on the rational allocation of computational resources (and our entry in the AutoML conference).

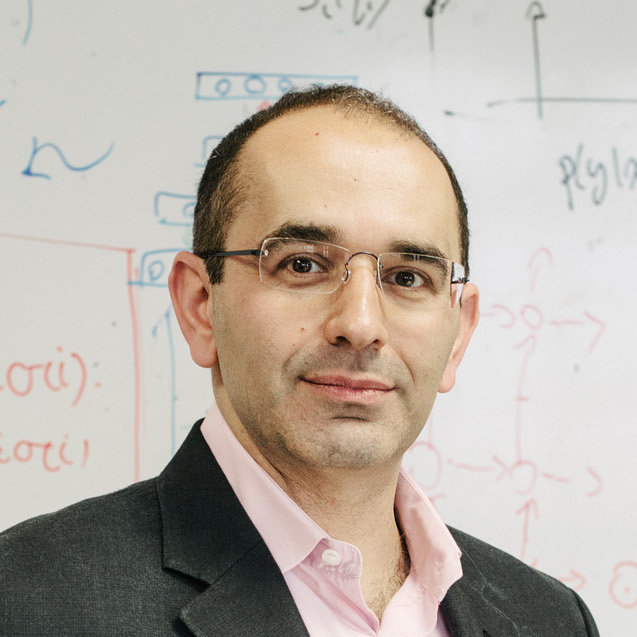

Zoubin Ghahramani FRS is Professor of Information Engineering at the University of Cambridge, where he leads the Machine Learning Group, and the Cambridge Liaison Director of the Alan Turing Institute, the UK’s national institute for Data Science. He studied computer science and cognitive science at the University of Pennsylvania, obtained his PhD from MIT in 1995, and was a postdoctoral fellow at the University of Toronto. His academic career includes concurrent appointments as one of the founding members of the Gatsby Computational Neuroscience Unit in London, and as a faculty member of CMU's Machine Learning Department for over 10 years. His current research interests include statistical machine learning, Bayesian nonparametrics, scalable inference, probabilistic programming, and building an automatic statistician. He has published over 250 research papers, and has held a number of leadership roles as programme and general chair of the leading international conferences in machine learning including: AISTATS (2005), ICML (2007, 2011), and NIPS (2013, 2014). In 2015 he was elected a Fellow of the Royal Society.

AlphaGo - Mastering the Game of Go with Deep Neural Networks and Tree Search

Thore Graepel - Google DeepMind and University College London

The game of Go has long been viewed as the most challenging of classic games for artificial intelligence owing to its enormous search space and the difficulty of evaluating board positions and moves. Here we introduce a new approach to computer Go that uses 'value networks' to evaluate board positions and 'policy networks' to select moves. These deep neural networks are trained by a novel combination of supervised learning from human expert games, and reinforcement learning from games of self-play.

Using this search algorithm, our program AlphaGo achieved a 99.8% winning rate against other Go programs and beat the human European Go champion Fan Hui by 5 games to 0, a feat thought to be at least a decade away by Go and AI experts alike. Finally, in a dramatic and widely publicised match, AlphaGo defeated Lee Sedol, the top player of the past decade, 4 games to 1.

In this talk, I will explain how AlphaGo works, describe our process of evaluation and improvement, and discuss what we can learn about computational intuition and creativity from the way AlphaGo plays.

Thore Graepel is a research group lead at Google DeepMind and holds a part-time position as Chair of Machine Learning at University College London. He studied physics at the University of Hamburg, Imperial College London, and Technical University of Berlin, where he also obtained his PhD in machine learning in 2001. He spent time as a postdoctoral researcher at ETH Zurich and Royal Holloway College, University of London, before joining Microsoft Research in Cambridge in 2003, where he co-founded the Online Services and Advertising group. Major applications of Thore’s work include Xbox Live’s TrueSkill system for ranking and matchmaking, the AdPredictor framework for click-through rate prediction in Bing, and the Matchbox recommender system which inspired the recommendation engine of Xbox Live Marketplace. More recently, Thore’s work on the predictability of private attributes from digital records of human behaviour has been the subject of intense discussion among privacy experts and the general public. Thore’s current research interests include probabilistic graphical models and inference, reinforcement learning, games, and multi-agent systems. He has published over one hundred peer-reviewed papers, is a named co-inventor on dozens of patents, serves on the editorial boards of JMLR and MLJ, and is a founding editor of the book series Machine Learning & Pattern Recognition at Chapman & Hall/CRC. At DeepMind, Thore has returned to his original passion of understanding and creating intelligence, and recently contributed to creating AlphaGo, the first computer program to defeat a human professional player in the full-sized game of Go, a feat previously thought to be at least a decade away.

Sequences, Choices, and their Dynamics (slides)

Ravi Kumar - Google

Sequences arise in many online and offline settings: urls to visit, songs to listen to, videos to watch, restaurants to dine at, and so on. User-generated sequences are tightly related to mechanisms of choice, where a user must select one from a finite set of alternatives. In this talk, we will discuss a class of problems arising from studying such sequences and the role discrete choice theory plays in these problems. We will present modeling and algorithmic approaches to some of these problems and illustrate them in the context of large-scale data analysis.

Ravi Kumar has been a senior staff research scientist at Google since 2012. Prior to this, he was a research staff member at the IBM Almaden Research Center and a principal research scientist at Yahoo! Research. His research interests include Web search and data mining, algorithms for massive data, and the theory of computation.

Dimensionality reduction with certainty (slides)

Rasmus Pagh - IT University of Copenhagen

Tools such as Johnson-Lindenstrauss dimensionality reduction and 1-bit minwise hashing have been successfully used to transform problems involving very high-dimensional real vectors into lower-dimensional equivalents, at the cost of introducing a random distortion of distances/similarities among vectors. While this can alleviate the computational cost associated with high dimensionality, the effect on the outcome of the computation (compared to working on the original vectors) can be hard to analyze and interpret. For example, the behavior of a basic kNN classifier is easy to describe and interpret, but if the algorithm is run on dimension-reduced vectors with distorted distances, it is much less transparent what is happening.

The talk starts with an introduction to randomized (data-independent) dimensionality reduction methods and gives some example applications in machine learning. Based on recent work in the theoretical computer science community we describe tools for dimension reduction that give stronger guarantees on approximation, replacing probabilistic bounds on distance/similarity with bounds that hold with certainty. For example, we describe a "distance sensitive Bloom filter": a succinct representation of high-dimensional boolean vectors that can identify vectors within distance r with certainty, while far vectors are only thought to be close with a small "false positive" probability. We also discuss work towards a deterministic alternative to random feature maps (i.e., dimension-reduced vectors from a high-dimensional feature space), and settings in which a pair of dimension-reducing mappings outperform single-mapping methods. While there are limits to what performance can be achieved with certainty, such techniques may be part of the toolbox for designing transparent and scalable machine learning and knowledge discovery methods.
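
As a rough illustration of the randomized, data-independent dimensionality reduction that the talk starts from, the following sketch projects vectors with a Gaussian random matrix in the Johnson-Lindenstrauss style. It is an assumption-laden toy, not material from the talk: the function names `random_projection` and `euclidean`, the seed, and the target dimension are all hypothetical, and it shows only the probabilistic variant, not the methods with certainty guarantees.

```python
import math
import random


def random_projection(vectors, k, seed=0):
    """Project d-dimensional vectors to k dimensions with a Gaussian matrix."""
    rng = random.Random(seed)
    d = len(vectors[0])
    # Entries are N(0, 1/k), so squared lengths are preserved in expectation.
    matrix = [[rng.gauss(0.0, 1.0) / math.sqrt(k) for _ in range(d)]
              for _ in range(k)]
    return [[sum(row[j] * v[j] for j in range(d)) for row in matrix]
            for v in vectors]


def euclidean(a, b):
    """Plain Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))
```

Comparing `euclidean` on the original and projected vectors shows the random distortion the abstract refers to: the projected distance is close to the original only with high probability, not with certainty.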

Rasmus Pagh graduated from Aarhus University in 2002, and is now a full professor at the IT University of Copenhagen. His work is centered around efficient algorithms for big data, with an emphasis on randomized techniques. His publications span theoretical computer science, databases, information retrieval, knowledge discovery, and parallel computing. His most well-known work is the cuckoo hashing algorithm (2001), which has led to new developments in several fields. In 2014 he received the best paper award at the WWW Conference for a paper with Pham and Mitzenmacher on similarity estimation, and started a 5-year research project funded by the European Research Council on scalable similarity search.

Human decisions are heavily influenced by social interaction, so predicting or influencing individual behavior requires modeling these interaction effects. In addition, the distributed learning strategies exhibited by human communities suggest methods of improving both machine learning and human-machine systems. Several practical examples will be described.

Professor Alex "Sandy" Pentland directs the MIT Connection Science and Human Dynamics labs and previously helped create and direct the MIT Media Lab and the Media Lab Asia in India. He is one of the most-cited scientists in the world, and Forbes recently declared him one of the "7 most powerful data scientists in the world" along with Google founders and the Chief Technical Officer of the United States. He has received numerous awards and prizes such as the McKinsey Award from Harvard Business Review, the 40th Anniversary of the Internet from DARPA, and the Brandeis Award for work in privacy.

He is a founding member of advisory boards for Google, AT&T, Nissan, and the UN Secretary General, and a serial entrepreneur who has co-founded more than a dozen companies, including social enterprises such as the Data Transparency Lab, the Harvard-ODI-MIT DataPop Alliance and the Institute for Data Driven Design. He is a member of the U.S. National Academy of Engineering and a leader within the World Economic Forum.

Industrial Track

The next decade will see a deep transformation of industrial applications by big data analytics, machine learning and the internet of things. Industrial applications have a number of unique features that set them apart from other domains. Central to many industrial applications in the internet of things is time series data generated at a high rate, often by hundreds or thousands of sensors, e.g. by a turbine or a smart grid. In a first wave of applications, this data is centrally collected and analyzed in Map-Reduce or streaming systems for condition monitoring, root cause analysis, or predictive maintenance. The next step is to shift from centralized analysis to distributed in-field or in situ analytics, e.g. in smart cities or smart grids. The final step will be distributed, partially autonomous decision making and learning in massively distributed environments.

In this talk I will give an overview of Siemens’ journey through this transformation, highlight early successes, products and prototypes, and point out future challenges on the way towards machine intelligence. I will also discuss architectural challenges for such systems from a Big Data point of view.

Michael May is Head of the Technology Field Business Analytics & Monitoring at Siemens Corporate Technology, Munich, and responsible for eleven research groups in Europe, US, and Asia. Michael is driving research at Siemens in data analytics, machine learning and big data architectures. In the last two years he was responsible for creating the Sinalytics platform for Big Data applications across Siemens’ business.

Before joining Siemens in 2013, Michael was Head of the Knowledge Discovery Department at the Fraunhofer Institute for Intelligent Analysis and Information Systems in Bonn, Germany. In cooperation with industry he developed Big Data Analytics applications in sectors ranging from telecommunication, automotive, and retail to finance and advertising.

Between 2002 and 2009 Michael coordinated two Europe-wide Data Mining Research Networks (KDNet, KDubiq). He was local chair of ICML 2005, ILP 2005 and program chair of the ECML/PKDD Industrial Track 2015. Michael did his PhD on machine discovery of causal relationships at the Graduate Programme for Cognitive Science at the University of Hamburg.

At Amazon, some of the world's largest and most diverse problems in e-commerce, logistics, digital content management, and cloud computing services are being addressed by machine learning on behalf of our customers. In this talk, I will give an overview of a number of key areas and associated machine learning challenges.

Matthias Seeger got his PhD from Edinburgh. He had academic appointments at UC Berkeley, MPI Tuebingen, Saarbruecken, and EPF Lausanne. Currently, he is a principal applied scientist at Amazon in Berlin. His interests are in Bayesian methods, large scale probabilistic learning, active decision making and forecasting.

Accepted papers

Conference Track

Room: 300B; 2016-09-20; 15:10 - 15:30

Authors:

- Jari Fowkes (University of Edinburgh)

- Charles Sutton (University of Edinburgh)

Abstract:

Mining itemsets that are the most interesting under a statistical model of the underlying data is a commonly used and well-studied technique for exploratory data analysis, with the most recent interestingness models exhibiting state of the art performance. Continuing this highly promising line of work, we propose the first, to the best of our knowledge, generative model over itemsets, in the form of a Bayesian network, and an associated novel measure of interestingness. Our model is able to efficiently infer interesting itemsets directly from the transaction database using structural EM, in which the E-step employs the greedy approximation to weighted set cover. Our approach is theoretically simple, straightforward to implement, trivially parallelizable and retrieves itemsets whose quality is comparable to, if not better than, existing state of the art algorithms as we demonstrate on several real-world datasets.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_26
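
The abstract above mentions that the E-step employs the greedy approximation to weighted set cover. As a minimal sketch of that textbook heuristic only (not the authors' implementation, and without the surrounding structural EM), the function below repeatedly picks the subset with the lowest weight per newly covered element. The name `greedy_weighted_set_cover` and its arguments are hypothetical.

```python
def greedy_weighted_set_cover(universe, subsets, weights):
    """Return indices of subsets chosen greedily to cover `universe`.

    At each step the subset with the smallest weight per newly covered
    element is selected (the classic greedy approximation).
    """
    uncovered = set(universe)
    chosen = []
    while uncovered:
        best, best_ratio = None, float("inf")
        for i, subset in enumerate(subsets):
            gain = len(uncovered & subset)
            if gain == 0:
                continue  # this subset covers nothing new
            ratio = weights[i] / gain
            if ratio < best_ratio:
                best, best_ratio = i, ratio
        if best is None:
            raise ValueError("universe cannot be covered by the given subsets")
        chosen.append(best)
        uncovered -= subsets[best]
    return chosen
```

In an itemset setting, one plausible reading is that `universe` holds the items of a transaction and `subsets` holds candidate itemsets, but that mapping is an assumption made here for illustration.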

Room: 300B; 2016-09-20; 16:40 - 17:00

Authors:

- Dhouha Grissa (INRA)

- Blandine Comte (INRA)

- Estelle Pujos Guillot (INRA)

- Amedeo Napoli (Inria Nancy Grand Est / LORIA)

Abstract:

The analysis of complex biological data deriving from metabolomic analytical platforms is a challenge. In this paper, we propose a new approach for processing massive and complex metabolomic data generated by such platforms with appropriate methods for discovering meaningful biological patterns. The analyzed datasets consist of a limited set of individuals and a large set of attributes or features, from which predictive markers of clinical outcomes should be mined. The experiments are based on a hybrid knowledge discovery process combining numerical methods such as SVM, Random Forests (RF) and ANOVA, with a symbolic method, namely Formal Concept Analysis (FCA). The outputs of these experiments highlight the best potential predictive biomarkers of metabolic diseases and the fact that RF and ANOVA are the most suitable methods for feature selection and discovery. The visualization of predictive biomarkers is based on correlation graphs and heatmaps, while FCA is used for visualizing the best feature selection methods within a concept lattice that is easily interpretable by an expert.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46128-1_36

Room: 300A; 2016-09-20; 11:20 - 11:40

Authors:

- Takoua Kefi (Higher School of Communication)

- Riadh Ksantini (University of Windsor, 401, Sunset Avenue, Windsor, ON, Canada)

- Mohamed Kaâniche (Higher School of Communication of Tunis, Tunisia)

- Adel Bouhoula (Higher School of Communication of Tunis, Tunisia)

Abstract:

Covariance-guided One-Class Support Vector Machine (COSVM) is a very competitive kernel classifier, as it emphasizes the low-variance projection directions of the training data, which results in high accuracy. However, COSVM training involves solving a constrained convex optimization problem, which requires large memory and an enormous amount of training time, especially for large-scale datasets. Moreover, it has difficulties in classifying sequentially obtained data. For these reasons, this paper introduces an incremental COSVM method that controls the possible changes of support vectors after the addition of new data points. The control procedure is based on the relationship between the Karush-Kuhn-Tucker conditions of COSVM and the distribution of the training set. Comparative experiments have been carried out to show the effectiveness of our proposed method, both in terms of execution time and classification accuracy. Incremental COSVM results in better classification performance when compared to canonical COSVM and contemporary incremental one-class classifiers.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_2

Room: 300A; 2016-09-20; 12:40 - 13:00

Authors:

- Shigeyuki Odashima (Fujitsu Laboratories LTD.)

- Miwa Ueki (Fujitsu Laboratories LTD.)

- Naoyuki Sawasaki (Fujitsu Laboratories LTD.)

Abstract:

We present an extension of the DP-means algorithm, a hard-clustering approximation of nonparametric Bayesian models. Although recent work reports that DP-means can converge to a local minimum, the conditions under which it does so are still unknown. This paper demonstrates one reason why DP-means converges to a local minimum: it cannot assign the optimal number of clusters when many data points lie within small distances of one another. As a first attempt to avoid the local minimum, we propose an extension of DP-means with the split-merge technique. The proposed algorithm splits a cluster when it contains many data points, so that the number of clusters is close to optimal. The experimental results with multiple datasets show the robustness of the proposed algorithm.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_5
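
For readers unfamiliar with the baseline that the abstract above extends, here is a minimal sketch of plain DP-means: a k-means-style loop that opens a new cluster whenever a point's squared distance to every center exceeds a penalty. It deliberately omits the split-merge extension proposed in the paper. The function name `dp_means`, the parameter name `lam`, and the choice of the global mean as the initial center are assumptions made for illustration.

```python
def dp_means(points, lam, max_iter=100):
    """Baseline DP-means; returns (centers, assignments).

    A point whose squared distance to every center exceeds `lam`
    becomes the center of a new cluster.
    """
    dim = len(points[0])
    # Start with one cluster centered at the global mean (an assumption).
    centers = [[sum(p[j] for p in points) / len(points) for j in range(dim)]]
    assign = [0] * len(points)
    for _ in range(max_iter):
        changed = False
        for i, p in enumerate(points):
            dists = [sum((p[j] - c[j]) ** 2 for j in range(dim))
                     for c in centers]
            k = min(range(len(centers)), key=dists.__getitem__)
            if dists[k] > lam:
                centers.append(list(p))  # open a new cluster at this point
                k = len(centers) - 1
            if assign[i] != k:
                assign[i] = k
                changed = True
        # Recompute means, dropping clusters that lost all their points.
        members = {}
        for i, k in enumerate(assign):
            members.setdefault(k, []).append(points[i])
        order = sorted(members)
        relabel = {old: new for new, old in enumerate(order)}
        centers = [[sum(p[j] for p in members[k]) / len(members[k])
                    for j in range(dim)] for k in order]
        assign = [relabel[k] for k in assign]
        if not changed:
            break
    return centers, assign
```

Because the number of clusters depends on the order in which points are visited and on `lam`, the loop can settle on a clustering that is only locally optimal, which is the behavior the paper studies.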

Room: 300B; 2016-09-20; 11:40 - 12:00

Authors:

- Phillip Odom

- Sriraam Natarajan (Indiana University)

Abstract:

Machine learning approaches that utilize human experts combine domain experience with data to generate novel knowledge. Unfortunately, most methods either provide only a limited form of communication with the human expert or are overly reliant on the human expert to specify their knowledge upfront. Thus, the expert is unable to understand what the system could learn without his or her involvement. Allowing the learning algorithm to query the human expert in the most useful areas of the feature space takes full advantage of the data as well as the expert. We introduce active advice-seeking for relational domains. Relational logic allows for compact but expressive interaction between the human expert and the learning algorithm. We demonstrate our algorithm empirically on several standard relational datasets.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_33

Room: 1000A; 2016-09-20; 11:20 - 11:40

Authors:

- Nitish Shirish Keskar (Northwestern University)

- Albert Berahas (Northwestern University)

Abstract:

Recurrent Neural Networks (RNNs) are powerful models that achieve exceptional performance on a plethora of pattern recognition problems. However, the training of RNNs is a computationally difficult task owing to the well-known "vanishing/exploding" gradient problem. Algorithms proposed for training RNNs either exploit no (or limited) curvature information and have cheap per-iteration complexity, or attempt to gain significant curvature information at the cost of increased per-iteration cost. The former set includes diagonally-scaled first-order methods such as ADAGRAD and ADAM, while the latter consists of second-order algorithms like Hessian-Free Newton and K-FAC. In this paper, we present adaQN, a stochastic quasi-Newton algorithm for training RNNs. Our approach retains a low per-iteration cost while allowing for non-diagonal scaling through a stochastic L-BFGS updating scheme. The method uses a novel L-BFGS scaling initialization scheme and is judicious in storing and retaining L-BFGS curvature pairs. We present numerical experiments on two language modeling tasks and show that adaQN is competitive with popular RNN training algorithms.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46128-1_1
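
The L-BFGS updating scheme mentioned in the abstract above builds on the standard two-loop recursion, sketched below for orientation. This is the generic deterministic recursion with the usual scalar initial scaling, not adaQN itself: the paper's own scaling initialization and its rules for storing curvature pairs are not reproduced. The names `lbfgs_direction`, `s_list`, and `y_list` are hypothetical.

```python
def _dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def lbfgs_direction(grad, s_list, y_list):
    """Return the search direction -H * grad via the two-loop recursion.

    `s_list` and `y_list` hold curvature pairs (parameter and gradient
    differences), ordered oldest first.
    """
    q = list(grad)
    history = []
    # First loop: newest pair to oldest.
    for s, y in zip(reversed(s_list), reversed(y_list)):
        rho = 1.0 / _dot(y, s)
        alpha = rho * _dot(s, q)
        q = [qi - alpha * yi for qi, yi in zip(q, y)]
        history.append((rho, alpha))
    # Standard scalar initial scaling from the most recent pair.
    if s_list:
        gamma = _dot(s_list[-1], y_list[-1]) / _dot(y_list[-1], y_list[-1])
    else:
        gamma = 1.0
    r = [gamma * qi for qi in q]
    # Second loop: oldest pair to newest.
    for (s, y), (rho, alpha) in zip(zip(s_list, y_list), reversed(history)):
        beta = rho * _dot(y, r)
        r = [ri + (alpha - beta) * si for ri, si in zip(r, s)]
    return [-ri for ri in r]
```

With an empty history the function falls back to the negative gradient, and with stored pairs it applies non-diagonal scaling at a cost linear in the number of pairs, which is the low per-iteration cost the abstract refers to.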

Room: 300A; 2016-09-20; 17:40 - 18:00

Authors:

- Xiawei Guo (HKUST)

- James Kwok (HKUST)

Abstract:

Crowdsourcing allows the collection of labels from a crowd of workers at low cost. In this paper, we focus on ordinal labels, whose underlying order is important. However, the labels can be noisy, as there may be amateur workers, spammers or even malicious workers. Moreover, some workers/items may have very few labels, making the estimation of their behavior difficult. To alleviate these problems, we propose a novel Bayesian model that clusters workers and items together using nonparametric Dirichlet process priors. This allows workers/items in the same cluster to borrow strength from each other. Instead of directly computing the posterior of this complex model, which is infeasible, we propose a new variational inference procedure. Experimental results on a number of real-world data sets show that the proposed algorithm is more accurate than the state of the art.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46128-1_27

Room: 300B; 2016-09-22; 14:50 - 15:10

Authors:

- John AOGA (INGI/ICTEAM/SST/UCL)

- Pierre Schaus (ICTEAM/SST/UCL)

- Tias Guns

Abstract:

The main advantage of Constraint Programming (CP) approaches for sequential pattern mining (SPM) is their modularity, which includes the ability to add new constraints (regular expressions, length restrictions, etc.). The current best CP approach for SPM uses a global constraint (module) that computes the projected database and enforces the minimum frequency; it does this with a filtering algorithm similar to the PrefixSpan method. However, the resulting system is not as scalable as some of the most advanced mining systems like Zaki’s cSPADE. We show how, using techniques from both data mining and constraint programming, one can use a generic constraint solver and yet outperform existing specialized systems. This is mainly due to two improvements in the module that computes the projected frequencies: first, computing the projected database can be sped up by pre-computing the positions at which an item can become unsupported by a sequence, thereby avoiding a scan of the full sequence each time; and second, taking inspiration from the trailing used in CP solvers, we devise a backtracking-aware data structure that allows fast incremental storing and restoring of the projected database. Detailed experiments show that this approach outperforms existing CP as well as specialized systems for SPM, and that the gain in efficiency translates directly into increased efficiency for constraint-based settings such as mining with regular expressions.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_20

- Download links: Code Data
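
To make the notion of a projected database in the abstract above concrete, the sketch below is a bare-bones PrefixSpan-style miner for sequences of single items. It is a plain recursive version written for illustration, under the assumption that each sequence is a list of hashable items; it does not include the paper's pre-computed positions, its trailing-based data structure, or any CP solver. The name `prefixspan` is hypothetical.

```python
def prefixspan(sequences, min_support):
    """Return (pattern, support) pairs for frequent sequential patterns."""
    results = []

    def mine(prefix, projected):
        # `projected` lists (sequence index, start position) pairs: the
        # suffixes that remain after matching `prefix`.
        counts = {}
        for sid, start in projected:
            for item in set(sequences[sid][start:]):
                counts[item] = counts.get(item, 0) + 1
        for item, support in sorted(counts.items()):
            if support < min_support:
                continue  # enforce the minimum frequency
            pattern = prefix + [item]
            results.append((pattern, support))
            # Project on the first occurrence of `item` in each suffix.
            new_projected = []
            for sid, start in projected:
                seq = sequences[sid]
                for pos in range(start, len(seq)):
                    if seq[pos] == item:
                        new_projected.append((sid, pos + 1))
                        break
            mine(pattern, new_projected)

    mine([], [(i, 0) for i in range(len(sequences))])
    return results
```

Note that this version rescans each suffix at every step, which is exactly the cost the two improvements described in the abstract aim to avoid.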

Room: 1000B; 2016-09-20; 16:40 - 17:00

Authors:

- Yongdai Kim

- Minwoo Chae

- Kuhwan Jeong (Seoul National University)

- Byungyup Kang

- Hyoju Chung

Abstract:

The hierarchical Dirichlet process (HDP) is a Bayesian nonparametric model that provides flexible mixed membership for documents. In this paper, we develop a novel mini-batch online Gibbs sampler algorithm for the HDP which can be easily applied to massive and streaming data. For this purpose, a new prior process, the so-called generalized hierarchical Dirichlet process (gHDP), is proposed. The gHDP is an extension of the standard HDP in which some prespecified topics can be included in the top-level Dirichlet process. By analyzing various datasets, we show that the proposed mini-batch online Gibbs sampler algorithm performs significantly better than the online variational algorithm for the HDP.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46128-1_32

Room: 1000B; 2016-09-22; 12:00 - 12:20

Authors:

- Michelle Sebag (LRI-CNRS)

- Marc Schoenauer (INRIA)

- Riad Akrour (LRI)

- Basile Mayeur (LRI)

Abstract:

The Anti Imitation-based Policy Learning (AIPoL) approach, taking inspiration from the Energy-based learning framework (LeCun et al. 2006), aims at a pseudo-value function that induces the same local order on the state space as a (nearly optimal) value function. By construction, the greedification of such a pseudo-value induces the same policy as the value function itself. The approach assumes that, thanks to prior knowledge, not-to-be-imitated demonstrations can easily be generated. For instance, applying a random policy on a good initial state (e.g., a bicycle in equilibrium) will on average lead to visiting states with decreasing values (the bicycle ultimately falls down). Such a demonstration, that is, a sequence of states with decreasing values, is used along with a standard learning-to-rank approach to define a pseudo-value function. If the model of the environment is known, this pseudo-value directly induces a policy by greedification. Otherwise, the bad demonstrations are exploited together with off-policy learning to learn a pseudo-Q-value function, from which a policy is likewise derived by greedification. To the best of our knowledge, the use of bad demonstrations to achieve policy learning is original. The theoretical analysis shows that the loss of optimality of the pseudo-value-based policy is bounded under mild assumptions, and the empirical validation of AIPoL on the mountain car, the bicycle and the swing-up pendulum problems demonstrates the simplicity and the merits of the approach.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_35

Room: 300A; 2016-09-20; 15:50 - 16:10

Authors:

- Yitong Li (BUPT)

- Chuan Shi

- Huidong Zhao

- Fuzhen Zhuang (Inst. of Com. Tech., CAS)

- Bin Wu

Abstract:

Due to personalized needs for the evaluation of specific aspects of product quality, recent years have witnessed a boom in research on aspect rating prediction, whose goal is to extract ad hoc aspects from online reviews and predict the rating or opinion on each aspect. Most existing work on aspect rating prediction assumes that the overall rating is the average of the aspect ratings or is very close to them. However, after analyzing real datasets, we observe that there is an obvious rating bias between the overall rating and the aspect ratings. Motivated by this observation, we study the problem of aspect mining with rating bias, and design a novel RAting-center model with BIas (RABI). Unlike the widely used review-center models, RABI adopts the overall rating as the center of the probabilistic model, which generates reviews and topics. In addition, a novel aspect rating variable in RABI is designed to effectively integrate prior information about the rating bias. Experiments on two real datasets (Dianping and TripAdvisor) validate that RABI significantly improves the prediction accuracy over existing state-of-the-art methods.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_29

Room: 1000B; 2016-09-20; 11:00 - 11:20

Authors:

- Jiangtao Yin (University of Massachusetts Amherst)

- Lixin Gao (University of Massachusetts Amherst)

Abstract:

Graph algorithms have become an essential component in many real-world applications. An essential property of graphs is that they are often dynamic. Many applications must update the computation result periodically on the new graph so as to keep it up-to-date. Incremental computation is a promising technique for this purpose. Traditionally, incremental computation is performed synchronously, since this is easy to implement. In this paper, we illustrate that incremental computation can be performed asynchronously as well. Asynchronous incremental computation can bypass synchronization barriers and always utilize the most recent values, and thus it is more efficient than its synchronous counterpart. Furthermore, we develop a distributed framework, GraphIn, to facilitate implementations of incremental computation on massive evolving graphs. We evaluate our asynchronous incremental computation approach via extensive experiments on a local cluster as well as the Amazon EC2 cloud. The evaluation results show that it can accelerate the convergence speed by as much as 14x when compared to recomputation from scratch.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_45

Room: 1000A; 2016-09-21; 17:20 - 17:40

Authors:

- Shin Matsushima (The University of Tokyo)

Abstract:

We are interested in learning from large-scale datasets with a massive number of possible features in order to obtain more accurate predictors. Specifically, the goal of this paper is to perform effective learning within the L1 regularized risk minimization problems in terms of both time and space computational resources. This will be accomplished by concentrating on the effective features out of a large number of unnecessary features. In order to do this, we propose a multi-threaded scheme that simultaneously runs processes for developing seemingly important features in the main memory and updating parameters with respect to only the seemingly important features. We verified our method through computational experiments showing that our proposed scheme can handle terabyte scale optimization problems with one machine.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46128-1_38

-

Room: 1000B; 2016-09-22; 17:00 - 17:20

-

Authors:

- Kongming Liang (ICT,CAS,CHINA)

- Hong Chang (ICT,CAS,CHINA)

- Shiguang Shan (ICT,CAS,CHINA)

- Xilin Chen (ICT,CAS,CHINA)

-

Abstract:

Searching images with multi-attribute queries is of practical significance in various real-world applications. The key problem in this task is how to effectively and efficiently learn from the conjunction of query attributes. In this paper, we propose the Attribute Conjunction Recurrent Neural Network (AC-RNN) to tackle this problem. Attributes involved in a query are mapped into the hidden units and combined in a recurrent way to generate the representation of the attribute conjunction, which is then used to compute a multi-attribute classifier as the output. To mitigate the data imbalance problem of multi-attribute queries, we propose a data weighting procedure in attribute conjunction learning with small positive samples. We also discuss the influence of attribute order in a query and present two methods, based on an attention mechanism and ensemble learning respectively, to further boost the performance. Experimental results on the aPASCAL, ImageNet Attributes and LFWA datasets show that our method consistently and significantly outperforms the other comparison methods on all types of queries.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46128-1_22

- Download links: Code

-

Room: 1000A; 2016-09-20; 11:00 - 11:20

-

Authors:

- Sheng Wang (University of Chicago)

- Siqi Sun (TTIC)

- Jinbo Xu (TTIC)

-

Abstract:

Deep Convolutional Neural Networks (DCNN) have shown excellent performance in a variety of machine learning tasks. This paper presents Deep Convolutional Neural Fields (DeepCNF), an integration of DCNN with Conditional Random Field (CRF), for sequence labeling with an imbalanced label distribution. The widely used training methods, such as maximum-likelihood and maximum labelwise accuracy, do not work well on imbalanced data. To handle this, we present a new training algorithm called maximum-AUC for DeepCNF. That is, we train DeepCNF by directly maximizing the empirical Area Under the ROC Curve (AUC), which is an unbiased measurement for imbalanced data. To achieve this, we formulate AUC in a pairwise ranking framework, approximate it by a polynomial function and then apply a gradient-based procedure to optimize it. We then test our AUC-maximized DeepCNF on three very different protein sequence labeling tasks: solvent accessibility prediction, 8-state secondary structure prediction, and disorder prediction. Our experimental results confirm that maximum-AUC greatly outperforms the other two training methods on 8-state secondary structure prediction and disorder prediction, since their label distributions are highly imbalanced, and performs similarly to the other two training methods on solvent accessibility prediction, which has three equally distributed labels. Furthermore, our experimental results show that our AUC-trained DeepCNF models greatly outperform existing popular predictors for these three tasks.
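The pairwise ranking view of AUC mentioned above can be illustrated with a minimal sketch: the empirical AUC is the fraction of (positive, negative) pairs in which the positive example receives the higher score. This computes the exact, non-differentiable quantity for a binary task; the polynomial approximation used for training in the paper is not reproduced here, and the function name and toy data are assumptions.

```python
import numpy as np

def pairwise_auc(scores, labels):
    """Empirical AUC as the fraction of correctly ordered (positive, negative) pairs."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels)
    pos = scores[labels == 1]
    neg = scores[labels == 0]
    # diff[i, j] > 0 means positive i is ranked above negative j.
    diff = pos[:, None] - neg[None, :]
    # Ties contribute half a correctly ordered pair.
    return float(((diff > 0) + 0.5 * (diff == 0)).mean())

# Toy example (hypothetical scores): 3 of the 4 pairs are ordered correctly.
auc = pairwise_auc([0.9, 0.4, 0.35, 0.1], [1, 0, 1, 0])
```

Because the value depends only on the ordering of positives relative to negatives, it is unaffected by how many examples each class has, which is why it suits imbalanced label distributions.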

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_1

- Download links: Code

-

Room: Belvedere; 2016-09-22; 17:00 - 17:20

-

Authors:

- Daniel Perry (University of Utah)

- Ross Whitaker (University of Utah)

-

Abstract:

We present an interesting modification to the traditional leverage score sampling approach by augmenting the scores with information from the data variance, which improves empirical performance on the column subsample selection problem (CSSP). We further present, to our knowledge, the first deterministic bounds for this augmented leverage score, and discuss how it compares to the traditional leverage score. We present some experimental results demonstrating how the augmented leverage score performs better than traditional leverage score sampling on CSSP in both a deterministic and probabilistic setting.
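For readers unfamiliar with the baseline, the following is a minimal sketch of traditional rank-k column leverage scores, computed from the top-k right singular vectors. The variance-based augmentation proposed in the paper is not reproduced, since the abstract does not specify it; the function name, matrix sizes and the deterministic top-3 selection are illustrative assumptions.

```python
import numpy as np

def column_leverage_scores(A, k):
    """Rank-k leverage score of each column: squared norm of that column of V_k^T."""
    _, _, Vt = np.linalg.svd(A, full_matrices=False)
    return np.sum(Vt[:k, :] ** 2, axis=0)

# Random toy matrix (hypothetical data) with 6 columns.
rng = np.random.default_rng(0)
A = rng.standard_normal((20, 6))
scores = column_leverage_scores(A, k=3)

# Deterministic selection: keep the columns with the largest scores.
top_columns = np.argsort(scores)[::-1][:3]
```

The scores sum to k, so dividing them by k gives a sampling distribution for the probabilistic variant of column selection.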

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_34

-

Room: 1000B; 2016-09-22; 15:30 - 15:50

-

Authors:

- Tom Hope (Hebrew University)

- Dafna Shahaf (Hebrew University)

-

Abstract:

We are interested in estimating individual labels given only a coarse, aggregated signal over the data points. In our setting, we receive sets ("bags") of unlabeled instances with constraints on label proportions. We relax the unrealistic assumption of known label proportions made in previous work; instead, we assume that only upper and lower bounds, and constraints on bag differences, are available. We motivate the problem, propose an intuitive problem formulation and algorithm, and apply it to real-world problems. Across several domains, we show how, using only proportion constraints and no labeled examples, we can achieve surprisingly high accuracy. In particular, we demonstrate how to predict income level using rough stereotypes and how to perform sentiment analysis using very little information. We also apply our method to guide exploratory analysis, recovering geographical differences in Twitter dialect.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_19

-

Room: 1000A; 2016-09-22; 11:40 - 12:00

-

Authors:

- Xuezhi Cao (Shanghai Jiao Tong University)

- Yong Yu (Shanghai Jiao Tong University)

-

Abstract:

Most people now participate in more than one online social network (OSN). However, the alignment indicating which accounts belong to the same natural person is not revealed. Aligning these isolated networks can provide a unified environment for users and help to improve online personalization services. In this paper, we propose a bootstrapping approach, BASS, to recover the alignment. It is an unsupervised general-purpose approach with minimal limitations on target networks and users, and is scalable to real OSNs. Specifically, we jointly model user consistencies of usernames, social ties, and user-generated content, and then employ the EM algorithm for parameter learning. For analysis and evaluation, we collect and publish large-scale data sets covering various types of OSNs and multi-lingual scenarios. We conduct extensive experiments to demonstrate the performance of BASS, concluding that our approach significantly outperforms state-of-the-art approaches.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46128-1_29

-

Room: 1000A; 2016-09-20; 15:30 - 15:50

-

Authors:

- Colin Bellinger (University of Ottawa)

- Chris Drummond (National Research Council of Canada)

- Nathalie Japkowicz (University of Ottawa)

-

Abstract:

Problems of class imbalance appear in diverse domains, ranging from gene function annotation to spectra and medical classification. On such problems, the classifier becomes biased in favour of the majority class. This leads to inaccuracy on the important minority classes, such as specific diseases and gene functions. Synthetic oversampling mitigates this by balancing the training set, whilst avoiding the pitfalls of random under- and oversampling. The existing methods are primarily based on the SMOTE algorithm, which employs a bias of randomly generating points between nearest neighbours. The relationship between the generative bias and the latent distribution has a significant impact on the performance of the induced classifier. Our research into gamma-ray spectra classification has shown that the generative bias applied by SMOTE is inappropriate for domains that conform to the manifold property, such as spectra, text, image and climate change classification. To this end, we propose a framework for manifold-based synthetic oversampling, and demonstrate its superiority in terms of robustness to the manifold with respect to the AUC on three spectra classification tasks and 16 UCI datasets.
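The SMOTE generative bias discussed above, generating points at random between nearest neighbours, can be sketched in a few lines. This is a simplified illustration of the baseline idea only, not the manifold-based framework proposed in the paper; the function name, parameters and toy points are assumptions.

```python
import numpy as np

def smote_like_samples(X_min, n_new, k=3, seed=0):
    """Create synthetic minority points on segments between a point and one of its k nearest neighbours."""
    rng = np.random.default_rng(seed)
    X_min = np.asarray(X_min, dtype=float)
    # Pairwise Euclidean distances; a point is never its own neighbour.
    d = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)
    neighbours = np.argsort(d, axis=1)[:, :k]
    # Pick a random base point and one of its neighbours for every new sample.
    base = rng.integers(0, len(X_min), size=n_new)
    nbr = neighbours[base, rng.integers(0, k, size=n_new)]
    # Interpolate at a uniformly random position along the connecting segment.
    gap = rng.random((n_new, 1))
    return X_min[base] + gap * (X_min[nbr] - X_min[base])

# Toy minority class (hypothetical points) in the unit square.
X_min = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]])
synthetic = smote_like_samples(X_min, n_new=8, k=3)
```

Every synthetic point lies on a straight line between two real points, which is exactly the bias that can leave the data manifold when that manifold is curved.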

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46128-1_16

-

Room: 1000A; 2016-09-22; 15:50 - 16:10

-

Authors:

- Tim Leathart (University of Waikato)

- Bernhard Pfahringer (University of Waikato)

- Eibe Frank (University of Waikato)

-

Abstract:

A system of nested dichotomies is a method of decomposing a multi-class problem into a collection of binary problems. Such a system recursively splits the set of classes into two subsets, and trains a binary classifier to distinguish between the two subsets. Even though ensembles of nested dichotomies with random structure have been shown to perform well in practice, a more sophisticated class subset selection method can be used to improve classification accuracy. We investigate an approach to this problem called random-pair selection, and evaluate its effectiveness compared to other published methods of subset selection. We show that our method outperforms other methods in many cases, and is at least on par in almost all other cases.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_12

- Download links: Code

-

Room: 1000A; 2016-09-20; 10:00 - 10:30

-

Authors:

- Sanjar Karaev (MPI Informatics)

- Pauli Miettinen (Max-Planck Institute for Informatics)

-

Abstract:

Subtropical algebra is a semi-ring over the nonnegative real numbers with standard multiplication and the addition defined as the maximum operator. Factorizing a matrix over the subtropical algebra gives us a representation of the original matrix as an element-wise maximum over a collection of nonnegative rank-1 matrices. Such structure can be compared to the well-known Nonnegative Matrix Factorization (NMF), which gives an element-wise sum over a collection of nonnegative rank-1 matrices. Using the maximum instead of the sum changes the 'parts-of-whole' interpretation of NMF to a 'winner-takes-it-all' interpretation. We recently introduced an algorithm for subtropical matrix factorization, called Capricorn, that was designed to work on discrete-valued data with discrete noise [Karaev & Miettinen, SDM '16]. In this paper we present another algorithm, called Cancer, that is designed to work over continuous-valued data with continuous noise, which is arguably the more common case. We show that Cancer is capable of finding sparse factors with excellent reconstruction error, being better than Capricorn, NMF, and SVD on continuous subtropical data. We also show that the winner-takes-it-all interpretation is usable in many real-world scenarios and lets us find structure that is different, and often easier to interpret, than what is found by NMF.
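To make the max-times semantics concrete, here is a small sketch of the subtropical matrix product next to the ordinary product used by NMF. It illustrates the algebra only, not the Cancer or Capricorn algorithms; the function name and the toy factor matrices are assumptions.

```python
import numpy as np

def subtropical_product(B, C):
    """Max-times product: result[i, j] = max over k of B[i, k] * C[k, j]."""
    return np.max(B[:, :, None] * C[None, :, :], axis=1)

# Toy nonnegative factors (hypothetical values).
B = np.array([[1.0, 0.0],
              [2.0, 3.0]])
C = np.array([[1.0, 2.0],
              [0.5, 1.0]])

max_times = subtropical_product(B, C)   # element-wise maximum of rank-1 terms
plus_times = B @ C                      # element-wise sum of rank-1 terms (NMF-style)
```

In the second row the two rank-1 terms overlap: the ordinary product adds them, while the max-times product keeps only the larger one, which is the winner-takes-it-all behaviour described above.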

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_36

-

Room: 1000A; 2016-09-20; 15:50 - 16:10

-

Authors:

- Fábio Pinto (INESC TEC)

- Carlos Soares

- Joao Moreira (LIAAD-INESC TEC)

-

Abstract:

Dynamic selection or combination (DSC) methods make it possible to select one or more classifiers from an ensemble according to the characteristics of a given test instance x. Most methods proposed for this purpose are based on the nearest neighbors algorithm: it is assumed that if a classifier performed well on a set of instances similar to x, it will also perform well on x. We address the problem of dynamically combining a pool of classifiers by combining two approaches: metalearning and multi-label classification. Taking into account that diversity is a fundamental concept in ensemble learning and that the interdependencies between the classifiers cannot be ignored, we solve the multi-label classification problem by using a widely known technique: Classifier Chains (CC). Additionally, we extend a typical metalearning approach by combining metafeatures characterizing the interdependencies between the classifiers with the base-level features. We ran experiments on 42 classification datasets and compared our method with several state-of-the-art DSC techniques, including another metalearning approach. Results show that our method improves on the other metalearning approach and is very competitive with the four other DSC methods.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46128-1_26

-

Room: 300A; 2016-09-22; 17:20 - 17:40

-

Authors:

- Michael Kamp (Fraunhofer IAIS)

- Sebastian Bothe (Fraunhofer IAIS)

- Mario Boley (Fritz Haber Institute of the Max Planck Society)

- Michael Mock (Fraunhofer IAIS)

-

Abstract:

We propose an efficient distributed online learning protocol for low-latency real-time services. It extends a previously presented protocol to kernelized online learners for multiple dynamic data streams. While kernelized learners achieve higher predictive performance on many real-world problems, communicating the support vector expansions of their models in-between distributed learners becomes inefficient for large numbers of support vectors. We propose a strong criterion for efficiency and investigate settings in which the proposed protocol fulfills this criterion. For that, we bound its communication, provide a loss bound, and evaluate the trade-off between predictive performance and communication.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_50

-

Room: 1000A; 2016-09-20; 12:00 - 12:20

-

Authors:

- Krzysztof Geras (University of Edinburgh)

- Charles Sutton (University of Edinburgh)

-

Abstract:

In representation learning, it is often desirable to learn features at different levels of scale. For example, in image data, some edges will span only a few pixels, whereas others will span a large portion of the image. We introduce an unsupervised representation learning method called a composite denoising autoencoder (CDA) to address this. We exploit the observation from previous work that in a denoising autoencoder, training with lower levels of noise results in more specific, fine-grained features. In a CDA, different parts of the network are trained with different versions of the same input, corrupted at different noise levels. We introduce a novel cascaded training procedure which is designed to avoid types of bad solutions that are specific to CDAs. We show that CDAs learn effective representations on two different image data sets.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46128-1_43

-

Room: 1000A; 2016-09-22; 15:10 - 15:30

-

Authors:

- Krzysztof Dembczynski (Poznan University of Technology)

- Wojciech Kotlowski (Poznan University of Technology)

- Willem Waegeman (Universiteit Gent)

- Robert Busa-Fekete (Paderborn University)

- Eyke Huellermeier (Universitat Paderborn)

-

Abstract:

Label tree classifiers are commonly used for efficient multi-class and multi-label classification. They represent a predictive model in the form of a tree-like hierarchy of (internal) classifiers, each of which is trained on a simpler (often binary) subproblem, and predictions are made by (greedily) following these classifiers' decisions from the root to a leaf of the tree. Unfortunately, this approach normally does not assure consistency for different losses on the original prediction task, even if the internal classifiers are consistent for their subtask. In this paper, we thoroughly analyze a class of methods referred to as probabilistic classifier trees (PCT). Thanks to training probabilistic classifiers at internal nodes of the hierarchy, these methods allow for searching the tree structure in a more sophisticated manner, thereby producing predictions of a less greedy nature. Our main result is a regret bound for 0/1 loss, which can easily be extended to ranking-based losses. In this regard, PCT nicely complements a related approach called filter trees (FT), and can indeed be seen as a natural alternative to it. We compare the two approaches both theoretically and empirically.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_32

-

Room: 1000A; 2016-09-20; 17:00 - 17:20

-

Authors:

- John Moeller (University of Utah)

- Vivek Srikumar (University of Utah)

- Sarathkrishna Swaminathan (University of Utah)

- Suresh Venkatasubramanian (University of Utah)

- Dustin Webb (University of Utah)

-

Abstract:

Kernel learning is the problem of determining the best kernel (either from a dictionary of fixed kernels, or from a smooth space of kernel representations) for a given task. In this paper, we describe a new approach to kernel learning that establishes connections between the Fourier-analytic representation of kernels arising out of Bochner's theorem and a specific kind of feed-forward network using cosine activations. We analyze the complexity of this space of hypotheses and demonstrate empirically that our approach provides scalable kernel learning superior in quality to prior approaches.
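The link between Bochner's theorem and cosine activations can be illustrated with random Fourier features for a fixed Gaussian kernel: frequencies are drawn from the kernel's spectral distribution and passed through a cosine layer. This sketch covers only the fixed-kernel case, whereas the paper learns the representation; the function name, `gamma`, and the sizes used are assumptions.

```python
import numpy as np

def random_fourier_features(X, n_features, gamma, seed=0):
    """Cosine feature map whose inner products approximate exp(-gamma * ||x - y||^2)."""
    rng = np.random.default_rng(seed)
    d = X.shape[1]
    # By Bochner's theorem the Gaussian kernel's spectral density is N(0, 2 * gamma * I).
    W = rng.normal(scale=np.sqrt(2.0 * gamma), size=(d, n_features))
    b = rng.uniform(0.0, 2.0 * np.pi, size=n_features)
    # One hidden layer with cosine activations and a fixed scaling.
    return np.sqrt(2.0 / n_features) * np.cos(X @ W + b)

# Compare the approximate kernel matrix with the exact one on toy data.
rng = np.random.default_rng(1)
X = rng.standard_normal((10, 4))
Z = random_fourier_features(X, n_features=5000, gamma=0.5)
exact = np.exp(-0.5 * np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=2))
max_abs_error = float(np.max(np.abs(Z @ Z.T - exact)))
```

Treating `W` and `b` as trainable parameters instead of fixed random draws turns this feature map into a feed-forward network over a space of kernels, which is the kind of connection the abstract describes.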

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_41

-

Room: 1000B; 2016-09-20; 17:20 - 17:40

-

Authors:

- Ruifei Cui (Radboud University Nijmegen)

- Perry Groot

- Tom Heskes

-

Abstract:

We propose the 'Copula PC' algorithm for causal discovery from a combination of continuous and discrete data, assumed to be drawn from a Gaussian copula model. It is based on a two-step approach. The first step applies Gibbs sampling on rank-based data to obtain samples of correlation matrices. These are then translated into an average correlation matrix and an effective number of data points, which in the second step are input to the standard PC algorithm for causal discovery. A stable version naturally arises when rerunning the PC algorithm on different Gibbs samples. Our 'Copula PC' algorithm extends the 'Rank PC' algorithm, which has been designed for Gaussian copula models for purely continuous data. In simulations, 'Copula PC' indeed outperforms 'Rank PC' in cases with mixed variables, in particular for larger numbers of data points, at the expense of a slight increase in computation time.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_24

-

Room: 300B; 2016-09-22; 12:00 - 12:20

-

Authors:

- Romain Tavenard (LETG-Rennes COSTEL /IRISA - Univ. Rennes 2)

- Simon Malinowski (IRISA/ Univ. Rennes 1)

-

Abstract:

In time-series classification, two antagonistic notions are at stake. On the one hand, in most cases, the sooner the time series is classified, the more rewarding. On the other hand, an early classification is more likely to be erroneous. Most early classification methods have been designed to take a decision as soon as a sufficient level of accuracy is reached. However, in many applications, delaying the decision can be costly. Recently, a framework dedicated to optimizing a trade-off between classification accuracy and the cost of delaying the decision was proposed in [3], together with an algorithm that decides online the optimal time instant to classify an incoming time series. On top of this framework, we build in this paper two different early classification algorithms that optimize a trade-off between decision accuracy and the cost of delaying the decision. As in [3], these algorithms are non-myopic in the sense that, even when classification is delayed, they can provide an estimate of when the optimal classification time is likely to occur. Our experiments on real datasets demonstrate that the proposed approaches are more robust than that of [3].

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46128-1_40

- Download links: Code Data

-

Room: 1000A; 2016-09-21; 12:30 - 12:50

-

Authors:

- Masamichi Shimosaka (Tokyo Institute of Technology)

- Takeshi Tsukiji (The University of Tokyo)

- Shoji Tominaga

- Kota Tsubouchi (Yahoo Japan Research)

-

Abstract:

In this paper, we propose a nonparametric Bayesian mixture model that simultaneously optimizes the topic extraction and group clustering while allowing all topics to be shared by all clusters for grouped data. In addition, in order to make the computational efficiency adequate for today’s large-scale data, we formulate our model so that it can use a closed-form variational Bayesian method to approximately calculate the posterior distribution. Experimental results with corpus data show that our model performs better than existing models, achieving a 22% improvement over the state-of-the-art model. Moreover, an experiment with location data from mobile phones shows that our model performs well in the field of big data analysis.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_15

-

Room: 1000A; 2016-09-22; 16:40 - 17:00

-

Authors:

- Subhabrata Mukherjee (Max Planck Informatics)

- Sourav Dutta (MPI-INF)

- Gerhard Weikum (Max Planck Institute for Informatics, Germany)

-

Abstract:

Online reviews provide viewpoints on the strengths and shortcomings of products/services, possibly influencing customers’ purchase decisions. However, there is an increasing proportion of non-credible reviews, whether fake (promoting/demoting an item), incompetent (involving irrelevant aspects), or biased, which entails the problem of identifying credible reviews. Prior works involve classifiers harnessing information about items and users, but fail to provide interpretability as to why a review is deemed non-credible. This paper presents a novel approach to address the above issues. We utilize latent topic models leveraging review texts, item ratings, and timestamps to derive consistency features without relying on item/user histories, which are unavailable for “long tail” items/users. We develop models for computing review credibility scores that provide interpretable evidence for non-credible reviews and that are also transferable to other domains, addressing the scarcity of labeled data. Experiments on real review datasets demonstrate improvements over state-of-the-art baselines.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_13

-

Room: 1000A; 2016-09-20; 12:40 - 13:00

-

Authors:

- Wenlin Wang (Duke University)

- Changyou Chen (Duke University)

- Wenlin Chen (Washington University in St. Louis)

- Lawrence Carin (Duke University)

-

Abstract:

We propose Deep Stochastic Neighbor Compression (DSNC), a data compression algorithm that learns a subset of compressed data in the feature space induced by a deep neural network. The learned compressed data is a small representation of the entire data, enabling traditional kNN to test quickly and with significantly boosted test accuracy. We conduct a comprehensive empirical evaluation on several benchmark datasets and show that DSNC achieves unprecedented test accuracy and compression ratio compared to related methods.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46128-1_49

- Download links: Code

-

Room: 1000A; 2016-09-22; 11:20 - 11:40

-

Authors:

- Han Xiao (Aalto University)

- Polina Rozenshtein

- Aristides Gionis (Aalto University)

-

Abstract:

With the increasing use of online communication platforms, such as email, Twitter, and messaging applications, we are faced with a growing amount of data that combine content (what is said), time (when), and user (by whom) information. An important computational challenge is to analyze these data, discover meaningful patterns, and understand what is happening. We consider the problem of mining online communication data and finding top-k temporal events. An event is a topic that is discussed frequently, in a relatively short time span, while the information flow respects the underlying network structure. We construct our model for detecting temporal events in two steps. We first introduce the notion of an interaction meta-graph, which connects associated interactions. Using this notion, we define a temporal event to be a subset of interactions that (i) are topically and temporally close and (ii) correspond to a tree that captures the information flow. The problem of finding the best temporal event leads to a budget version of the prize-collecting Steiner-tree (PCST) problem, which we solve using three different methods: a greedy approach, a dynamic-programming algorithm, and an adaptation of an existing approximation algorithm. The problem of finding the top-k events among a set of candidate events maps to the maximum set-cover problem and is thus solved greedily. We compare and analyze our algorithms on both synthetic and real datasets, such as Twitter and email communication. The results show that our methods are able to detect meaningful temporal events.
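The final step above, choosing top-k events via maximum set cover, is commonly handled with the standard greedy rule: repeatedly take the candidate that covers the most not-yet-covered items. The sketch below shows that generic rule on made-up data; the event names, the interaction ids and the function name are hypothetical and not taken from the paper.

```python
def greedy_max_cover(candidates, k):
    """Pick up to k candidate sets, each time taking the one with the largest marginal coverage."""
    covered, chosen = set(), []
    for _ in range(k):
        best = max(
            (name for name in candidates if name not in chosen),
            key=lambda name: len(candidates[name] - covered),
            default=None,
        )
        # Stop early when nothing is left or no candidate adds new items.
        if best is None or not candidates[best] - covered:
            break
        chosen.append(best)
        covered |= candidates[best]
    return chosen, covered

# Hypothetical candidate events, each given as the set of interaction ids it contains.
candidates = {
    "e1": {1, 2, 3, 4},
    "e2": {3, 4, 5},
    "e3": {5, 6},
    "e4": {1, 2},
}
chosen, covered = greedy_max_cover(candidates, k=2)
```

Here the second pick is "e3" rather than the larger "e2", because only marginal coverage counts once "e1" has been selected.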

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_43

- Download links: Code

-

Room: 300B; 2016-09-22; 15:30 - 15:50

-

Authors:

- Boris Cule (University of Antwerp)

- Len Feremans (University of Antwerp)

- Bart Goethals (University of Antwerp)

-

Abstract:

Discovering patterns in long event sequences is an important data mining task. Most existing work focuses on frequency-based quality measures that allow algorithms to use the anti-monotonicity property to prune the search space and efficiently discover the most frequent patterns. In this work, we step away from such measures, and evaluate patterns using cohesion, a measure of how close to each other the items making up the pattern appear in the sequence on average. We tackle the fact that cohesion is not an anti-monotonic measure by developing a novel pruning technique in order to reduce the search space. By doing so, we are able to efficiently unearth rare, but strongly cohesive, patterns that existing methods often fail to discover.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46128-1_23

- Download links: Code Data

-

Room: 300B; 2016-09-20; 11:00 - 11:20

-

Authors:

- Tian Guo (EPFL)

- Konstantin Kutzkov

- Mohamed Ahmed (NEC Lab, Europe)

- Jean-Paul Calbimonte (EPFL)

- Karl Aberer (EPFL)

-

Abstract:

The availability of massive volumes of data and recent advances in data collection and processing platforms have motivated the development of distributed machine learning algorithms. In numerous real-world applications large datasets are inevitably noisy and contain outliers. These outliers can dramatically degrade the performance of standard machine learning approaches such as regression trees. To this end, we present a novel distributed regression tree approach that utilizes robust regression statistics, statistics that are more robust to outliers, for handling large and noisy data. We propose to integrate robust statistics based error criteria into the regression tree. A data summarization method is developed and used to improve the efficiency of learning regression trees in the distributed setting. We implemented the proposed approach and baselines based on Apache Spark, a popular distributed data processing platform. Extensive experiments on both synthetic and real datasets verify the effectiveness and efficiency of our approach.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_6

- Download links: Code Data

-

Room: 300B; 2016-09-20; 17:20 - 17:40

-

Authors:

- Senzhang Wang (Beihang University)

- Fengxiang Li (Peking University)

- Leon Stenneth (Nokia's HERE Connected Driving)

- Philip Yu (University of Illinois at Chicago)

-

Abstract:

Estimating traffic conditions in arterial networks with probe data is a practically important yet substantially challenging problem. Because GPS probes are unreliable and have a low sampling frequency, probe data are usually not sufficient for fully estimating traffic conditions for a large arterial network. For the first time, this paper studies how to exploit social media as an auxiliary data source and combine it with probe data to enhance traffic congestion estimation. Motivated by the increasingly available traffic information on Twitter, we first extensively collect tweets that report various traffic events such as congestion, accidents, and road construction. Next, we propose an extended Coupled Hidden Markov Model to estimate traffic conditions of an arterial network by effectively combining the two types of data. To address the computational challenge, a sequential importance sampling based EM algorithm is also given. Evaluations on the arterial network of Chicago demonstrate the effectiveness of the proposed method.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_16

-

Room: 1000B; 2016-09-22; 17:20 - 17:40

-

Authors:

- Jon Parkinson (The University of Manchester)

- Ubai Sandouk (University of Manchester)

- Ke Chen (University of Manchester)

-

Abstract:

Guiding representation learning towards temporally stable features improves object identity encoding from video. Existing models have applied temporal coherence uniformly over all features based on the assumption that optimal object identity encoding only requires temporally stable components. We explore the effects of mixing temporally coherent ‘invariant’ features alongside ‘variable’ features in a single representation. Applying temporal coherence to different proportions of available features, we introduce a mixed representation autoencoder. Trained on several datasets, model outputs were passed to an object classification task to compare performance. Whilst the inclusion of temporal coherence improved object identity recognition in all cases, the majority of tests favoured a mixed representation.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_22

-

Room: 1000A; 2016-09-20; 14:50 - 15:10

-

Authors:

- Maxime Gasse (Université Lyon 1)

- Alex Aussem (Université Lyon 1)

-

Abstract:

We discuss a method to improve the exact F-measure maximization algorithm called GFM, proposed in [Dembczynski et al., NIPS 2011] for multi-label classification, assuming the label set can be partitioned into conditionally independent subsets given the input features. If the labels were all independent, the estimation of only $m$ parameters ($m$ denoting the number of labels) would suffice to derive Bayes-optimal predictions in $O(m^2)$ operations [Nan et al., ICML 2012]. In the general case, $m^2 + 1$ parameters are required by GFM to solve the problem in $O(m^3)$ operations. In this work, we show that the number of parameters can be reduced further to $m^2/n$ in the best case, assuming the label set can be partitioned into $n$ conditionally independent subsets. As this label partition needs to be estimated from the data beforehand, we first use the procedure proposed in [Gasse et al., ICML 2015] to find such a partition and then infer the required parameters locally in each label subset. The latter are aggregated and serve as input to GFM to form the Bayes-optimal prediction. We show in a synthetic experiment that the reduction in the number of parameters brings about significant benefits in terms of performance.
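As a rough worked example of the parameter counts quoted above, assume (this is an illustrative reading, not a statement from the paper) that the best case corresponds to $n$ equal-sized subsets of $m/n$ labels each, with roughly $(m/n)^2$ parameters per subset and additive constants ignored:

$$
n \cdot \left(\frac{m}{n}\right)^2 = \frac{m^2}{n},
\qquad
\text{e.g. } m = 20,\ n = 4:\quad m^2 + 1 = 401 \ \text{ versus } \ \frac{m^2}{n} = 100 .
$$

Under the same assumption, the fully independent case $n = m$ gives $m^2/n = m$, which agrees with the $m$ parameters stated for independent labels.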

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46128-1_39

- Download links: Code

-

Room: 300B; 2016-09-22; 11:20 - 11:40

-

Authors:

- Ali Pesaranghader (University of Ottawa)

- Herna Viktor

-

Abstract:

Decision makers increasingly require near-instant models to make sense of fast-evolving data streams. Learning from such evolving environments is, however, a challenging task. This challenge is partially due to the fact that the distribution of data often changes over time, thus potentially leading to degradation in the overall performance. In particular, classification algorithms need to adapt their models after facing such distributional changes (also referred to as concept drifts). Usually, drift detection methods are utilized in order to accomplish this task. It follows that detecting concept drifts as soon as possible, while producing fewer false positives and false negatives, is a major objective of drift detectors. To this end, we introduce the Fast Hoeffding Drift Detection Method (FHDDM), which detects the drift points using a sliding window and Hoeffding's inequality. FHDDM detects a drift when a significant difference between the maximum probability of correct predictions and the most recent probability of correct predictions is observed. Experimental results confirm that FHDDM detects drifts with shorter detection delays, fewer false positives and fewer false negatives when compared to the state of the art.
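The detection rule described above can be sketched as follows: keep a sliding window of correct/incorrect predictions, track the highest windowed accuracy seen so far, and signal a drift when the current windowed accuracy falls below it by more than a Hoeffding bound. This is a minimal illustration written from the abstract; the class name, the default `window_size` and `delta`, and the reset behaviour are assumptions rather than the authors' reference implementation.

```python
import math
from collections import deque

class HoeffdingWindowDetector:
    """Sliding-window drift detector based on Hoeffding's inequality (illustrative sketch)."""

    def __init__(self, window_size=25, delta=1e-6):
        self.window = deque(maxlen=window_size)
        # Hoeffding bound for the mean of window_size values in [0, 1].
        self.epsilon = math.sqrt(math.log(1.0 / delta) / (2.0 * window_size))
        self.p_max = 0.0

    def update(self, correct):
        """Feed one prediction outcome; return True when a drift is signalled."""
        self.window.append(1 if correct else 0)
        if len(self.window) < self.window.maxlen:
            return False  # wait until the window is full
        p_now = sum(self.window) / len(self.window)
        self.p_max = max(self.p_max, p_now)
        if self.p_max - p_now >= self.epsilon:
            # Assumed reset: forget the old concept after signalling a drift.
            self.window.clear()
            self.p_max = 0.0
            return True
        return False

# Toy stream: 100 correct predictions followed by 50 errors.
detector = HoeffdingWindowDetector(window_size=25, delta=1e-6)
stream = [True] * 100 + [False] * 50
detections = [i for i, outcome in enumerate(stream) if detector.update(outcome)]
```

A smaller `delta` widens the bound and reduces false alarms at the cost of a longer detection delay, while a larger window smooths the accuracy estimate in the same direction.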

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_7

-

Room: 300B; 2016-09-20; 17:00 - 17:20

-

Authors:

- Yuchen Wang (Shanghai Jiao Tong University)

- Kan Ren (Shanghai Jiao Tong University)

- Weinan Zhang (University College London)

- Jun Wang (UCL)

- Yong Yu (Shanghai Jiao Tong University)

-

Abstract:

Real-time auctions have become an important online advertising trading mechanism. A crucial issue for advertisers is to model the market competition, i.e., bid landscape forecasting. It is formulated as predicting the market price distribution for each ad auction given its side information. Existing solutions mainly focus on parameterized heuristic forms of the market price distribution and learn the parameters to fit the data. In this paper, we present a functional bid landscape forecasting method that automatically learns the function mapping from the features of each ad auction to the market price distribution without any assumption about the functional form. Specifically, to deal with categorical feature input, we propose a novel decision tree model with a node splitting scheme based on attribute value clustering. Furthermore, to deal with the problem of right-censored market price observations, we propose to incorporate a survival model into tree learning and prediction, which largely reduces the model bias. Experiments on real-world data demonstrate that our models achieve substantial performance gains over previous work on various metrics.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46128-1_8

- Download links: Code

-

Room: 1000B; 2016-09-20; 17:00 - 17:20

-

Authors:

- Srijith P.K. (University of Sheffield)

- Balamurugan P (INRIA-ENS, Paris)

- Shirish Shevade

-

Abstract:

Several machine learning problems arising in natural language processing can be modelled as sequence labelling problems. Gaussian processes (GPs) provide a Bayesian approach to learning such problems in a kernel-based framework. We propose Gaussian process models based on a pseudo-likelihood to solve sequence labelling problems. The pseudo-likelihood model enables one to capture multiple dependencies among the output components of the sequence without becoming computationally intractable. We use an efficient variational Gaussian approximation method to perform inference in the proposed model. We also provide an iterative algorithm which can effectively make use of the information from the neighbouring labels to perform prediction. The ability to capture multiple dependencies makes the proposed approach useful for a wide range of sequence labelling problems. Numerical experiments on some sequence labelling problems in natural language processing demonstrate the usefulness of the proposed approach.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46128-1_14

-

Room: 300A; 2016-09-22; 15:10 - 15:30

-

Authors:

- Peng Yan (NJUPT)

- Yun Li (NJUPT)

-

Abstract:

Since instances in multi-label problems are associated with several labels simultaneously, most traditional feature selection algorithms for single-label problems are inapplicable. Therefore, new criteria to evaluate features and new methods to model label correlations are needed. In this paper, we adopt a graph model to capture the label correlation, and propose a feature selection algorithm for multi-label problems that combines this graph with large margin theory. The proposed multi-label feature selection algorithm, GMBA, can efficiently utilize high-order label correlation. Experiments on real-world data sets demonstrate the effectiveness of the proposed method.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46128-1_34

- Download links: Code

-

Room: 1000A; 2016-09-21; 16:40 - 17:00

-

Authors:

- Branislav Kveton (Adobe Research, USA)

- Hung Bui (Adobe Research, USA)

- Mohammad Ghavamzadeh

- Georgios Theocharous (Adobe Research, USA)

- S Muthukrishnan

- Siqi Sun (TTIC)

-

Abstract:

Structured high-cardinality data arises in many domains and poses a major challenge for both modeling and inference. Graphical models are a popular approach to modeling structured data but they are not suitable for high-cardinality variables. The count-min (CM) sketch is a popular approach to estimating probabilities in high-cardinality data but it does not scale well beyond a few variables. In this paper, we bring together graphical models and count sketches, and address the problem of estimating probabilities in structured high-cardinality data. We view these data as a stream $(x^{(t)})_{t = 1}^n$ of $n$ observations from an unknown distribution $P$, where $x^{(t)} \in [M]^K$ is a $K$-dimensional vector and $M$ is the cardinality of its entries, which is very large. Suppose that the graphical model $\mathcal{G}$ of $P$ is known, and let $\bar{P}$ be the maximum-likelihood estimate (MLE) of $P$ from $(x^{(t)})_{t = 1}^n$ conditioned on $\mathcal{G}$. We design and analyze algorithms that approximate any $\bar{P}$ with $\hat{P}$, such that $\hat{P}(x) \approx \bar{P}(x)$ for any $x \in [M]^K$ with probability at least $1 - \delta$, in space independent of $M$. The key idea of our approximations is to use the structure of $\mathcal{G}$ and approximately estimate its factors by ``sketches''. The sketches hash high-cardinality variables using random projections. Our approximations are computationally and space efficient, being independent of $M$. Our error bounds are multiplicative and significantly improve over those of the CM sketch, a state-of-the-art approach to estimating the frequency of values in streams. We evaluate our algorithms on synthetic and real-world problems, and report order-of-magnitude improvements over the CM sketch.
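For readers unfamiliar with the baseline named in this abstract, the following is a minimal Python sketch of a plain count-min sketch used to estimate the frequency of values in a stream. It illustrates only the baseline, not the graphical-model algorithms proposed in the paper; the class name, the hash family, and the default width and depth are assumptions chosen for illustration.

```python
import random
from collections import Counter


class CountMinSketch:
    """Hypothetical minimal count-min sketch for frequency estimation."""

    def __init__(self, width=256, depth=5, seed=0):
        rng = random.Random(seed)
        self.width = width
        self.depth = depth
        self._prime = (1 << 61) - 1  # modulus for the linear hash family
        # One (a, b) pair per row defines an independent-looking hash function.
        self._params = [
            (rng.randrange(1, self._prime), rng.randrange(0, self._prime))
            for _ in range(depth)
        ]
        self.table = [[0] * width for _ in range(depth)]
        self.total = 0  # number of observations seen so far

    def _index(self, row, item):
        a, b = self._params[row]
        return ((a * hash(item) + b) % self._prime) % self.width

    def update(self, item, count=1):
        """Add `count` occurrences of `item` to every row."""
        for row in range(self.depth):
            self.table[row][self._index(row, item)] += count
        self.total += count

    def estimate(self, item):
        """Return an upper bound on the count of `item` (never an underestimate)."""
        return min(self.table[row][self._index(row, item)]
                   for row in range(self.depth))

    def probability(self, item):
        """Estimated relative frequency of `item` in the stream."""
        return self.estimate(item) / self.total if self.total else 0.0


# Usage: a stream of K = 2 dimensional observations.
rng = random.Random(1)
stream = [(rng.randrange(50), rng.randrange(50)) for _ in range(2000)]
sketch = CountMinSketch()
for x in stream:
    sketch.update(x)
true_counts = Counter(stream)
```

The memory used is `width * depth` counters regardless of how many distinct values appear, and hash collisions can only inflate an estimate, which is why the minimum over rows is taken.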

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46128-1_6

-

Room: 1000A; 2016-09-20; 17:40 - 18:00

-

Authors:

- Oleksandr Zadorozhnyi (University of Potsdam)

- Gundhart Benecke (Uni Potsdam)

- Stephan Mandt (Columbia University)

- Tobias Scheffer (Uni Potsdam)

- Marius Kloft (HU Berlin)

-

Abstract:

In order to avoid overfitting, it is a common practice to regularize linear classification models using squared or absolute-value norms as regularization terms. In our article we consider a new method of regularization in the SVM framework: Huber-norm regularization imposes a mixture of $\ell_1$ and $\ell_2$-norm regularization on the features. We derive the dual optimization problem, prove an upper bound on the statistical risk of the model class by means of Rademacher Complexity and establish a simple type of oracle inequality on the optimality of the decision rule. Empirically, we observe that the Huber-norm regularizer outperforms $\ell_1$-norm, $\ell_2$-norm, and elastic-net regularization for a wide range of benchmark data sets.
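To make the "mixture of $\ell_1$ and $\ell_2$" behaviour concrete, here is a small Python sketch of a penalty built from the standard Huber function: quadratic for small weights and linear for large ones. The function name, the threshold parameter `mu`, and this particular parametrization are assumptions for illustration; the exact form used in the paper may differ.

```python
def huber_penalty(weights, mu=1.0):
    """Sum of Huber function values over the weight vector (assumed form).

    For |w_j| <= mu the term is w_j^2 / (2 * mu), which behaves like an
    l2 penalty; for |w_j| > mu it is |w_j| - mu / 2, which behaves like
    an l1 penalty. The two pieces meet continuously at |w_j| = mu.
    """
    total = 0.0
    for w_j in weights:
        a = abs(w_j)
        if a <= mu:
            total += a * a / (2.0 * mu)
        else:
            total += a - mu / 2.0
    return total


# Usage: a small weight is penalized quadratically, a large one linearly.
small = huber_penalty([0.5])
large = huber_penalty([3.0])
```

With this parametrization, shrinking `mu` towards zero makes the penalty approach the $\ell_1$ norm, while a large `mu` makes it behave like a scaled squared $\ell_2$ norm on the weights seen in practice.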

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46128-1_45

-

Room: 1000A; 2016-09-20; 16:40 - 17:00

-

Authors:

- Aniket Chakrabarti (The Ohio State University)

- Bortik Bandyopadhyay (The Ohio State University)

- Srinivasan Parthasarathy

-

Abstract:

We present a novel data embedding that significantly reduces the estimation error of the locality sensitive hashing (LSH) technique when used in a reproducing kernel Hilbert space (RKHS). Efficient and accurate kernel approximation techniques either involve the kernel principal component analysis (KPCA) approach or the Nyström approximation method. In this work we show that extant LSH methods in this space suffer from a bias problem that, moreover, is difficult to estimate a priori. Consequently, the LSH estimate of a kernel is different from that of the KPCA/Nyström approximation. We provide a theoretical rationale for this bias, which is also confirmed empirically. We propose an LSH algorithm that can reduce this bias, and consequently our approach can match the KPCA or the Nyström methods' estimation accuracy while retaining the traditional benefits of LSH. We evaluate our algorithm on a wide range of real-world image datasets (for which kernels are known to perform well) and show the efficacy of our algorithm using a variety of principled evaluations including mean estimation error, KL divergence and the Kolmogorov-Smirnov test.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_40

-

Room: 300A; 2016-09-20; 11:00 - 11:20

-

Authors:

- Roberto Souza (UFMG)

- Renato Assunção (UFMG)

- Derick Oliveira (UFMG)

- Denise Brito (UFMG)

- Meira Wagner (Federal University of Minas Gerais)

-

Abstract:

Traditionally, in health surveillance, high-risk zones are identified based only on the residence address or the workplace of diseased individuals. This provides little information about the places where people are infected, the truly important information for disease control. The recent availability of spatial data generated by geotagged social media posts offers a unique opportunity: by identifying and following diseased individuals, we obtain a collection of sequential geo-located events, each sequence being issued by a social media user. The sequence of map positions implicitly provides an estimate of the users' social trajectories as they drift on the map. Existing data mining techniques for spatial cluster detection fail to address this new setting as they require a single location for each individual under analysis. In this paper we present two stochastic models with their associated algorithms to mine this new type of data. The Visit Model finds the most likely zones that a diseased person visits, while the Infection Model finds the most likely zones where a person gets infected while visiting. We demonstrate the applicability and effectiveness of our proposed models by applying them to more than 100 million geotagged tweets from Brazil in 2015. In particular, we target the identification of infection hot spots associated with dengue, a mosquito-transmitted disease that affects millions of people in Brazil annually, and billions worldwide. We applied our algorithms to data from 11 large cities in Brazil and found infection hot spots, showing the usefulness of our methods for disease surveillance.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_46

-

Room: 1000B; 2016-09-22; 15:50 - 16:10

-

Authors:

- Shankar Vembu (University of Toronto)

- Sandra Zilles (University of Regina)

-

Abstract:

Interactive learning is a process in which a machine learning algorithm is provided with meaningful, well-chosen examples as opposed to the randomly chosen examples typical in standard supervised learning. In this paper, we propose a new method for interactive learning from multiple noisy labels where we exploit the disagreement among annotators to quantify the easiness (or meaningfulness) of an example. We demonstrate the usefulness of this method in estimating the parameters of a latent variable classification model, and conduct experimental analyses on a range of synthetic and benchmark data sets. Furthermore, we theoretically analyze the performance of the perceptron in this interactive learning framework.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46128-1_31

-

Room: 1000A; 2016-09-21; 10:50 - 11:10

-

Authors:

- Kai Puolamaki (Finnish Institute of Occupational Health)

- Bo Kang (Ghent University)

- Jefrey Lijffijt (Ghent University)

- Tijl De Bie (Ghent University)

-

Abstract:

Data visualization and iterative/interactive data mining are attracting rapidly growing attention, both in research and in industry. However, integrated methods and tools that combine advanced visualization and data mining techniques are rare, and those that exist are often specialized to a single problem or domain. In this paper, we introduce a novel generic method for interactive visual exploration of high-dimensional data. In contrast to most visualization tools, it is not based on the traditional dogma of manually zooming and rotating data. Instead, the tool initially presents the user with an `interesting' projection of the data and then employs data randomization with constraints to allow users to flexibly and intuitively express their interests or beliefs using visual interactions that correspond to exactly defined constraints. These constraints expressed by the user are then taken into account by a projection-finding algorithm to compute a new `interesting' projection, a process that can be iterated until the user runs out of time or finds that the constraints explain everything she needs to find from the data. We present the tool by means of two case studies, one controlled study on synthetic data and another on real census data.

- Springer Link: http://link.springer.com/chapter/10.1007/978-3-319-46227-1_14

- Download links: Code Data

-

Room: 1000A; 2016-09-22; 15:30 - 15:50

-

Authors:

- Ibrahim Alabdulmohsin (KAUST)